Why Data Organization Must Come Before AI Strategy

“Don’t talk to me about ChatGPT if you haven’t connected your different data sources.”

That’s Jonathan Metrick, Partner at Sagard, on a recent podcast. It sounds dismissive. It’s not. It’s the most practical advice anyone in PE has given about AI this year.

Everyone’s racing to adopt AI. PE-backed companies are spending 86% more on technology and analytics projects than they were a year ago. The pressure to “have an AI strategy” is real—boards are asking, operating partners are pushing, and every conference deck has a slide on generative AI.

But here’s what’s actually happening on the ground: most companies aren’t ready. Not because they lack budget or tools. Because they skipped the unglamorous prerequisite that determines whether any of this works.

Sean Mooney, founder of BluWave and a 20-year PE veteran, puts it bluntly: “This is the unsexy part of AI. If you don’t have your data organized, structured, clean, and you don’t invest in keeping it that way—because it will lose calibration—you really can’t get the most out of any of these tools. It’ll be garbage in, garbage out.”

The Gap Between AI Plans and Data Reality

I’ve walked into PE-backed companies where leadership couldn’t tell me if performance was improving or declining. Finance had one answer. Operations had another. Nobody could definitively say what was true.

That’s not an AI problem. That’s a foundation problem.

Here’s the pattern:

The board asks: “What’s our AI strategy?”

The 100-day plan says: “Implement AI-powered analytics for better decision-making.”

Month 6 reality: The AI pilot is running. It’s producing outputs. Nobody trusts them.

Why? Because the AI is trained on, or pulling from, data that was never organized, never validated, never maintained. It's confidently producing insights from a foundation of chaos.

Dave Shephard, Director of Portfolio Operations at Rainier Partners, frames it clearly: “You need data before AI. Without clean, structured data, AI is just expensive guessing.”

This isn’t a technology limitation. It’s a sequencing problem. Companies are implementing AI before they’ve answered more basic questions:

- What are we actually measuring?

- Do our definitions match across departments?

- Can we trust what’s in our systems?

- Who owns data quality?

If you can’t answer those, AI won’t help. It’ll amplify the confusion.

Why This Keeps Happening

The AI hype cycle is partly to blame. But there’s something structural too.

When PE firms acquire companies, diligence validates that data exists. Systems exist. Reporting exists. Boxes get checked.

What diligence doesn’t validate is whether that data is organized, whether definitions are consistent, or whether anyone is actually using it to make decisions.

I’ve seen this firsthand. A company passes diligence with a “state-of-the-art data warehouse.” Month 3 post-close, new leadership tries to pull performance reports. Nothing makes sense. Table structures are a mess. Data types don’t match. Business logic doesn’t reflect how the operation actually works.

The warehouse technically functioned. It ingested data without crashing. Nobody had been using it for decisions, so nobody knew it was broken.

Most inherited systems pass the existence test but fail the utility test.

Now layer AI on top. The AI doesn’t know the data is broken. It treats garbage as ground truth and produces outputs that look sophisticated but are fundamentally unreliable.

Dan Gaspar, Partner at TZP Group: “You can’t improve what you can’t measure.”

And you can’t measure what you haven’t defined. And you can’t define what’s buried in disconnected, unvalidated systems.

The Lean Six Sigma Warning

Mooney sees a cautionary parallel: “You’ve got to be careful because you can fall into what happened with Lean Six Sigma—you can have a lot of individual projects that are disconnected. Maybe they’re all doing things that are individually interesting, but you kind of lose calibration on what you’re really trying to solve.”

This is what AI pilot purgatory looks like.

A machine learning project here. A chatbot there. An automation initiative somewhere else. Each one individually interesting. None connected to a coherent strategy. None built on a foundation that can support the weight.

The companies getting AI right aren’t rushing to implement. They’re slowing down long enough to get the basics right first.

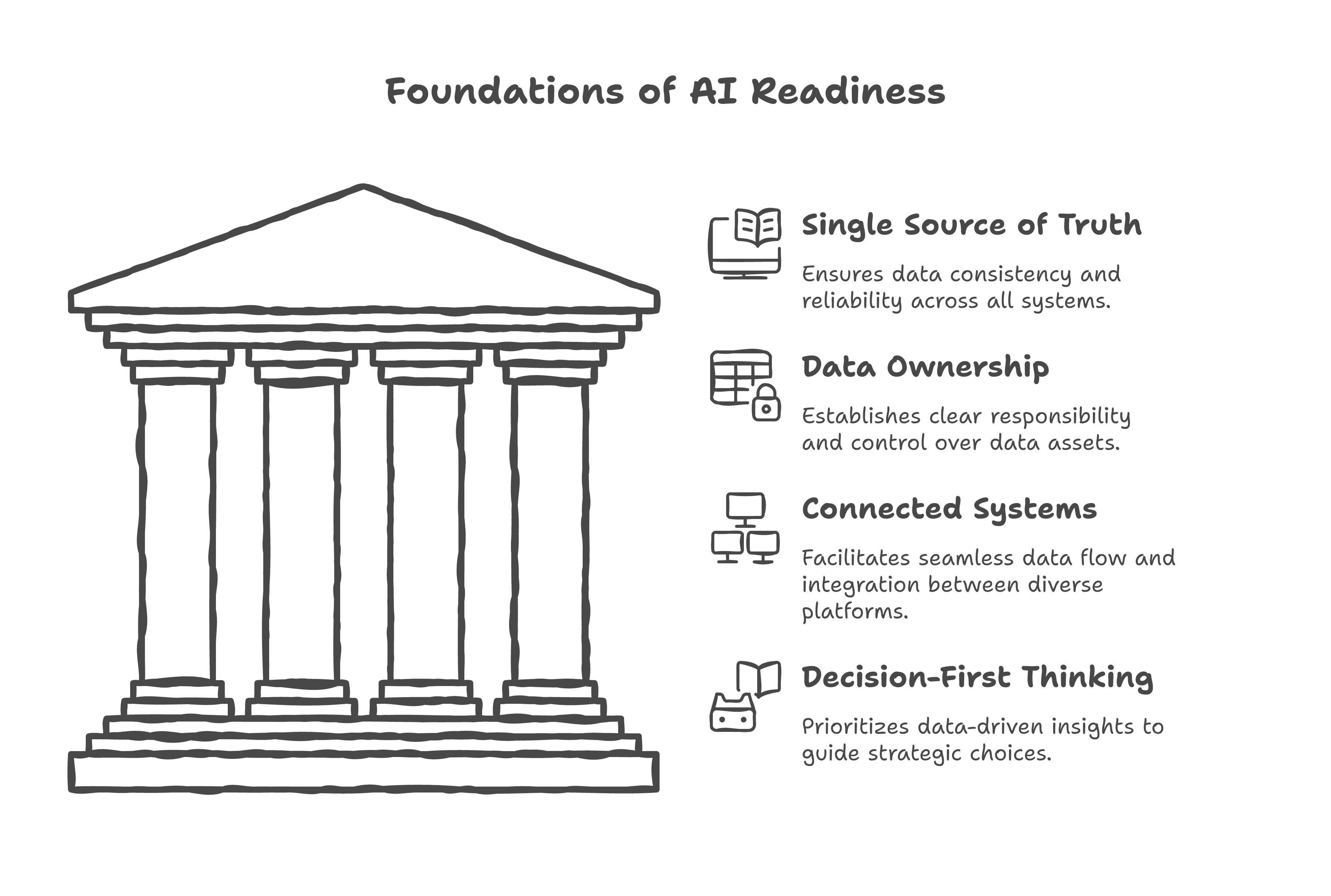

What Has to Happen First: Four Data Foundations

Before your next AI initiative, audit your data foundation. Not whether data exists—whether it’s usable. (An analytics maturity assessment can help identify where you stand.)

Validate your single source of truth. Can you pull consistent numbers for your core metrics? Revenue, margin, retention—whatever matters most. If three departments give you three different answers, you have a definition problem, not an AI opportunity.
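One way to make that check routine is to collect each department's reported figure for the same metric and flag any metric where the numbers disagree. The sketch below is illustrative only. The `MetricReport` structure, the `find_conflicts` function, the 0.5% tolerance and the sample figures are all hypothetical, not taken from any company or tool mentioned here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricReport:
    """One department's reported value for a named metric (hypothetical schema)."""
    department: str
    metric: str
    value: float


def find_conflicts(reports, rel_tolerance=0.005):
    """Return {metric: {department: value}} for metrics whose reports disagree.

    A metric is flagged when the spread between its highest and lowest
    reported values exceeds rel_tolerance (an assumed threshold), measured
    relative to the largest magnitude reported for that metric.
    """
    by_metric = {}
    for r in reports:
        by_metric.setdefault(r.metric, {})[r.department] = r.value

    conflicts = {}
    for metric, values in by_metric.items():
        lo, hi = min(values.values()), max(values.values())
        scale = max(abs(lo), abs(hi)) or 1.0  # avoid dividing by zero
        if (hi - lo) / scale > rel_tolerance:
            conflicts[metric] = values
    return conflicts


if __name__ == "__main__":
    # Hypothetical figures for illustration only.
    reports = [
        MetricReport("finance", "q3_revenue", 12_400_000),
        MetricReport("operations", "q3_revenue", 11_900_000),
        MetricReport("sales", "q3_revenue", 12_410_000),
        MetricReport("finance", "gross_margin", 0.41),
        MetricReport("operations", "gross_margin", 0.41),
    ]
    print(find_conflicts(reports))
    # {'q3_revenue': {'finance': 12400000, 'operations': 11900000, 'sales': 12410000}}
```

Any metric that comes back from a check like this is a definition conversation to have with the departments involved before it becomes an input to an AI tool.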

Assign ongoing ownership. Mooney makes this point explicitly: “Data deteriorates like an asset in a manufacturing facility.” You wouldn’t buy a plant and assume the equipment maintains itself. Data is the same. Someone has to own quality. Someone has to monitor for drift.
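Ownership is easier to enforce when each check names an owner. Here is a minimal sketch. It assumes a batch of records arrives as a list of dictionaries, and that the baseline null rate and mean for a field were captured when the data was last validated. The `QualityCheck` fields, the 2-point and 10% thresholds, and the owner label are hypothetical choices for illustration. Real drift monitoring would usually track more than two statistics.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class QualityCheck:
    """A recurring data-quality check with a named owner (hypothetical structure)."""
    field: str
    owner: str
    baseline_null_rate: float
    baseline_mean: float
    max_null_increase: float = 0.02  # assumed: alert if nulls rise more than 2 points
    max_mean_shift: float = 0.10     # assumed: alert if the mean moves more than 10%


def run_check(check, rows):
    """Return alert messages for one field across a batch of rows."""
    values = [row.get(check.field) for row in rows]
    present = [v for v in values if v is not None]
    alerts = []

    # An empty batch is treated as fully missing.
    null_rate = 1 - len(present) / len(values) if values else 1.0
    if null_rate - check.baseline_null_rate > check.max_null_increase:
        alerts.append(f"{check.field}: null rate {null_rate:.1%} "
                      f"(baseline {check.baseline_null_rate:.1%}); notify {check.owner}")

    # Relative shift is undefined for a zero baseline, so that case is skipped.
    if present and check.baseline_mean:
        shift = abs(mean(present) - check.baseline_mean) / abs(check.baseline_mean)
        if shift > check.max_mean_shift:
            alerts.append(f"{check.field}: mean shifted {shift:.1%} from baseline; "
                          f"notify {check.owner}")
    return alerts
```

The important design choice is that every alert carries a named owner. A check that fires without anyone accountable for acting on it is just another report nobody reads.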

Connect before you compute. Most PE-backed companies operate with data siloed across CRM, ERP, billing, and ops systems. Each has its own definitions, its own cadences, its own quirks. AI that only sees one slice produces insights that only reflect one slice. The work of connecting sources is tedious. It’s also essential.
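Much of the tedium in connecting sources comes down to agreeing on a join key. This sketch assumes CRM and billing both carry a customer ID but format it differently. `normalize_key`, `connect_sources` and the normalization rules (trim whitespace, uppercase, drop hyphens) are hypothetical. The sketch returns the joined records plus the IDs that fail to match in either direction, so unmatched records can be reviewed before any model sees the data.

```python
def normalize_key(raw_id):
    """Normalize customer IDs that systems format differently (assumed rules)."""
    return raw_id.strip().upper().replace("-", "")


def connect_sources(crm_accounts, billing_invoices):
    """Join CRM accounts to billing totals on a normalized customer ID.

    crm_accounts: {raw_customer_id: account_name}
    billing_invoices: list of (raw_customer_id, amount)
    Returns (joined, unmatched_billing_ids, unmatched_crm_ids), where joined
    maps each matched key to (account_name, total_billed).
    """
    crm = {normalize_key(k): name for k, name in crm_accounts.items()}

    # Sum every invoice under its normalized customer key.
    billed = {}
    for raw_id, amount in billing_invoices:
        key = normalize_key(raw_id)
        billed[key] = billed.get(key, 0.0) + amount

    joined = {key: (crm[key], total) for key, total in billed.items() if key in crm}
    unmatched_billing = sorted(set(billed) - set(crm))  # billed but unknown to CRM
    unmatched_crm = sorted(set(crm) - set(billed))      # in CRM but never billed
    return joined, unmatched_billing, unmatched_crm
```

One caveat: if two different raw CRM IDs normalize to the same key, this sketch silently keeps only one of them. A production version would need to detect those collisions rather than overwrite them.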

Start with decisions, not tools. The question isn’t “Should we use AI?” It’s “What decisions are we trying to make better?” Start there. Work backward to what data you’d need. Assess whether it exists in usable form. Then evaluate tools.

Most companies do this backward. They implement AI because they’re supposed to, then look for problems it can solve. That’s how you end up with expensive pilots that never scale.

The Payoff: What Happens When You Get It Right

The data isn't all cautionary. BluWave's research shows productivity growth at PE-backed companies has risen to 4.9%, up from 3.3% a year ago. The firms getting AI right are seeing real gains.

But they’re getting it right because they did the prerequisite work. They organized their data. They established clear definitions. They built infrastructure that makes AI actually useful.

The 86% increase in technology spending isn’t all wasted. The winners are just the ones who invested in foundations before they invested in AI.

The Real Question to Ask Before Any AI Investment

Every portfolio company is going to be asked about their AI strategy. The pressure isn’t going away.

But the companies that actually capture value won’t be the ones with the most AI pilots. They’ll be the ones who can answer a more basic question first: Is your data organized well enough that AI could actually help?

For most companies, the answer is no. That’s the real starting point.

Mooney frames the choice starkly: “You can either run towards the change or you can resist it and suffer.”

He’s right. But running towards AI before your data is ready isn’t running towards change.

It’s running towards expensive confusion.

Alex Escoriaza helps PE-backed companies turn messy data into operational clarity. If you’re being asked about AI strategy but aren’t sure your data can support it, let’s talk.